Stefanie Kegel – UX Design & Psychology

Hi, glad you're here.

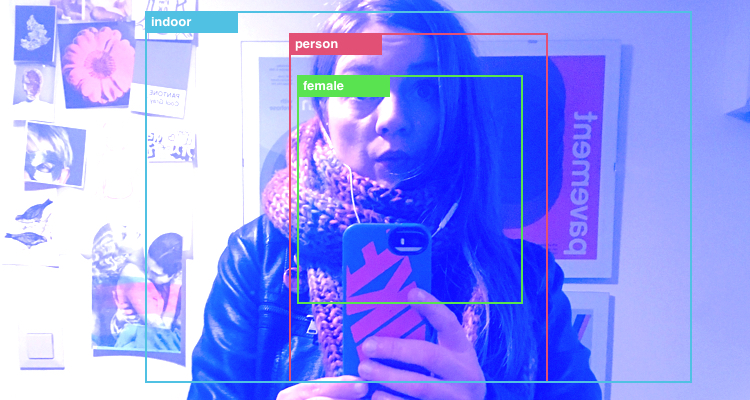

As a UX designer with a background in psychology, I combine user experience with insights from cognitive and social psychology. I'm interested not only in interface design, but also in how design shapes our behavior, how technology distributes power, and how we can create digital products and services that truly make a difference.

This blog has existed since 2008 and serves me, among other things, as a bookmarking tool. It is also where I share my thoughts on UX, ethics in design, my part-time psychology studies, and everything else that moves me in this field.

Enjoy browsing!